The AT&T Debacle – A Cautionary Tale

April 12, 2009

A few days ago, AT&T announced the specifics on a trial of their new pricing program, and, in true AT&T fashion, continued their rape of the American consumer in another attempt to keep us in their profitable technological dark age. I suppose that may be a little harsh, but hey, I am not so happy right now. I’m sure you will forgive me my moment of rage. So what is their new, creative pricing plan? Make you pay more (a lot more, of course) to get the service you have today.

Apparently, AT&T has decided that they are tired of people’s access to “unlimited” broadband services (godless freeloaders), so they have decided to start running trials in which users are charged by the gigabyte for Internet access. What this essentially means is that without any discernible increase in the cost of providing their service, they have taken it upon themselves to greatly increase the cost of their service to the average consumer. To get the same unlimited access that you’re paying 20-35 dollars a month for now, you will have to pay AT&T (at least) 150 dollars in the future. Let me repeat that: AT&T is raising the price to roughly 500% of what you pay today for no apparent reason. Well, other than greed, that is.

Now, to be fair, AT&T has put forth a few arguments on why this price hike is necessary. The first and foremost among these arguments is that people are actually using the bandwidth that they paid for. And they can’t have that. They first tried to bump up their profit margins again by trying to force web companies like Google and Yahoo to pay more for all of their web traffic to be prioritized, a potentially disastrous proposal for the internet as a whole, and a definitive death blow to the cause of net neutrality. Unfortunately, a do-nothing Congress rejected the net neutrality bill that would have prevented such a thing from taking place, but at least Congress restrained itself from making the telecoms’ brilliant idea law. Because of this setback, AT&T and the other telecoms were forced to go back to the drawing board. Looks like they’ve decided that if they can’t take from the provider side, they’re going to take from the consumer. And they want it all.

The second most quoted reason for the price hike is much more sickening, however. AT&T has gone around proudly declaring that they need the money to keep pace with technological innovation, so as to continue to provide their customers with “superior service.” Hrm…you mean like the $200 billion in taxpayer money you and your telecom buddies were given back in the 90’s to achieve the goal of 86 million U.S. homes with symmetrical 46 Mbps internet connections by 2006? Or was that not quite enough for you? While they’ve sat counting the money they robbed from the American people, we have quickly slid from 1st worldwide in broadband penetration to 25th. And please, spare me the “we’re too large of a country, Japan has it so easy” rhetoric. In case you didn’t realize, Japan is a country about the size of California, and I don’t think that ANY Californians have yet been blessed with the 100 Mbit/s internet connections that most Japanese citizens enjoy. Oh, and telecom companies? I’m still waiting for my $2000 refund check (or, preferably, my faster internet connection). And don’t think I’ll forget.

Their third line of reasoning is just silly. AT&T argues that because it worked in Europe (an arguable point) and on cell phones (a ridiculous point), it should now be the rule rather than the exception. I can’t help but wonder at how they decided that Europeans liked paying more for their internet connections. My guess is that their definition of “worked” is that people didn’t storm their corporate offices with pitchforks. Well, either that or the entire continent is comprised of masochists. You can decide which is the more likely scenario. But believe me, if I or any of my friends could have an unlimited 3G connection that didn’t cost an arm and a leg, we would subscribe in a heartbeat. However, providers just will not do that, regardless of the MASSIVE consumer interest in such a service. Why? They make more money per kilobyte when they charge by the kilobyte than when they give people an unlimited pass. It has nothing to do with need, or an increase in traffic, or a better way of thinking about providing internet service for consumers. It is about them padding their already enormous profits with more of your hard earned money.

I mentioned in the title of this post that this was a cautionary tale. I want to clarify what I mean by that. First, I want to caution the American people: if we continue to let the large corporations in this country dictate the progress of technological innovation for their own gain, we will fall further and further behind the rest of the world. Technology, with all its benefits, has made this country the great place that it is, and to let that slip away for the short-term profit of a wealthy few would be one of the worst decisions that we could make. The hard economic times that we are now in would devolve into something much worse without our technological upper hand. Second, I want to caution the telecom companies, specifically AT&T: be careful of the ground on which you tread. You’ve already been lucky so far that the U.S. government has not taken action against you for your monopolistic business practices now and your blatant fraud back in the 90’s. Price gouging your customers to the point of ridiculousness while simultaneously stealing their tax dollars is not going to win you any friends. Eventually, your misdeeds and lies will come into the public light (probably after you have pushed people’s pocketbooks just a bit too far), and people will be calling for heads to roll. When that happens, you’re going to need all the friends you can get.

</rant>

SSH: Secure Browsing Via SOCKS Proxy

April 10, 2009

It seems that not a week goes by any more that I don’t find some new, fun trick to do with SSH. A few weeks ago, I found one that to me has been especially useful.

I was sitting in the Tulsa International Airport, once again wishing that airports would just suck it up and provide free wireless access throughout their terminals. It’s a real pet peeve of mine, as layovers become incredibly more painful when I can’t waste away my time stumbling about the internet. I might even have to do something *shudder* productive…

Anyway, there I was, sipping some coffee and working on a project, when I noticed that there was an open wireless network available that was not one of those godforsaken Boingo hotspots. Being the curious person that I am, I decided to see if I could connect. Sure enough, it let me right on. Being the cautious person I am, I went to an HTTPS secured site to see what would happen. And sure enough, the normally valid certificate was invalid, pretty much guaranteeing someone was trying to listen in. I was still happy though; at least I still had internet access and could keep myself mildly entertained with that.

However, I was feeling especially curious that day, so I decided to try to tunnel my traffic over SSH to a box back in my apartment, keeping my oh-so precious personal data away from prying eyes. Besides, beats working. After a little digging through man pages, this task, to my surprise, turned out to be much simpler than I had expected. All you need is one SSH command and an SSH server that you have access to and that has forwarding enabled (the default OpenSSH installation on Ubuntu does).

If you don’t have an SSH server set up and you’re using Ubuntu at home, simply execute this on your home machine:

sudo apt-get install openssh-server

This will install and start the service. Make sure that a.) your user password is of decent strength (SSH is a common target for password brute-forcing) and b.) you have port 22 forwarded on your router if you are behind a NAT, so that you can access it from outside of your local network. The SSH client should already be installed on a default Ubuntu install (you can also do this using PuTTY on Windows).

Once you have these two things ready, just open up a terminal on your laptop/netbook/mobile device and type the following:

ssh -Nf -D randPortNum remote-username@ssh.server.com

Replace randPortNum with a port number of your choosing (something above 1024 if you are not root, which is probable), remote-username with your username on the remote system, and ssh.server.com with the hostname or IP address of your SSH server. If you are using your home server, I’d suggest using DynDNS to get a simple domain name to access it with. If you do not feel very comfortable with the command line, or you are lazy like me (I hate having to close the window after I’m done…), you can execute this command using Alt+F2, and the SSH client will prompt you for your password.

Now let me explain what exactly this command is doing. The N flag tells SSH not to run a remote command (you only want the forwarding, not a shell), and the f flag tells it to fork into the background once you have authenticated, so that you can do whatever you want after you execute it. Close the terminal, keep using it for something else, anything you please (just not killall ssh!). The D flag is the one doing the really interesting stuff: the OpenSSH developers decided it would be cool to put SOCKS proxy functionality straight into the client, and the D flag is how you access it. Basically, you are just telling SSH to start “local dynamic application-level port forwarding” (SOCKS proxy) from the specified port on your local machine to the remote host. Now, any program on your computer that supports SOCKS proxies will be able to connect to that port on your machine and have its traffic automagically forwarded (and encrypted!) across the internet to your remote machine, where it will then go out to its destination.
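For anyone who wants this wrapped up in something reusable, here is a rough sketch of the same command inside a small shell function with a sanity check on the port. The function names are made up for illustration, and the port, username, and hostname in the usage note are placeholders, not anything special.

```shell
# Sketch only: both function names are hypothetical helpers.

# Succeeds if $1 is a whole number in the unprivileged range 1025-65535.
valid_unprivileged_port() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;
    esac
    [ "$1" -gt 1024 ] && [ "$1" -le 65535 ]
}

# Start the SOCKS tunnel: start_tunnel PORT USER@HOST
start_tunnel() {
    if ! valid_unprivileged_port "$1"; then
        echo "port must be between 1025 and 65535" >&2
        return 1
    fi
    # -N: run no remote command, -f: go to the background after login,
    # -D: open a local SOCKS proxy on the given port
    ssh -Nf -D "$1" "$2"
}
```

You would call it as `start_tunnel 1080 remote-username@ssh.server.com`. If curl is installed, something like `curl --socks5-hostname localhost:1080 http://example.com/` should then fetch the page through the tunnel.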

To add to it, tons of programs do support SOCKS proxies, more than you might think. Firefox, Opera, Pidgin, Deluge, Transmission (Tracker only), the list goes on. On top of that, using some programs (like tsocks) you can actually use any TCP based program over it. Very cool stuff.
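As a rough sketch of the tsocks route: tsocks normally reads its settings from /etc/tsocks.conf, and the helper below (a made-up name) just prints a minimal config that points it at a SOCKS 5 proxy on your own machine. It assumes the tunnel is listening on whatever local port you pass in.

```shell
# Print a minimal tsocks configuration for a SOCKS 5 proxy on localhost.
# Usage: tsocks_conf PORT   (tsocks_conf is a hypothetical helper name)
tsocks_conf() {
    cat <<EOF
server = 127.0.0.1
server_port = $1
server_type = 5
EOF
}
```

With the tunnel on port 1080, `tsocks_conf 1080 | sudo tee /etc/tsocks.conf` would write the file, after which prefixing a command with tsocks (for example `tsocks wget http://example.com/`) should send its TCP connections through the proxy.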

To go ahead and encrypt your web traffic, open up Firefox (if you need Opera instructions, they’re probably very similar). Go to Edit->Preferences->Advanced->Network->Settings (Configure How Firefox Connects To The Internet). Select “Manual proxy configuration”, enter “localhost” for your SOCKS host and the port number you chose earlier as your port. Either SOCKS 4 or 5 should work (I use 5). Now, it should look similar to the picture below:

Now just click OK, close out the Settings dialog, and you’re done! Go here and check it out: your IP is now the same as the remote host’s. If you’re really paranoid, you can also make Firefox tunnel your DNS queries over the proxy. This prevents the nameserver of the local network from feeding you bad DNS information or keeping tabs on what you are viewing (you are still relying on the remote nameserver being trustworthy though :P). To do this, open up a tab, enter the address “about:config”, search for “network.proxy.socks_remote_dns” and set it to true. And that’s it!
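If you would rather not click through the dialogs every time, the same settings can be kept in a user.js file in your Firefox profile directory, which Firefox reads at startup. The sketch below only prints the preference lines. The helper name is made up, and the profile path in the usage note is a placeholder you would have to fill in yourself.

```shell
# Print Firefox preference lines for a SOCKS 5 proxy on localhost,
# including DNS lookups through the proxy.
# Usage: firefox_socks_prefs PORT   (hypothetical helper name)
firefox_socks_prefs() {
    cat <<EOF
user_pref("network.proxy.type", 1);
user_pref("network.proxy.socks", "localhost");
user_pref("network.proxy.socks_port", $1);
user_pref("network.proxy.socks_version", 5);
user_pref("network.proxy.socks_remote_dns", true);
EOF
}
```

Something like `firefox_socks_prefs 1080 >> ~/.mozilla/firefox/YOUR_PROFILE/user.js` would append them. Keep in mind that Firefox will then expect the tunnel to be up whenever it starts.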

This trick can be immensely useful in many situations, from securing your traffic across untrusted local networks, to getting around packet shaping/filtering, to remaining anonymous online. I now use it all the time on my laptop, and very rarely trust the local network. A word of warning before I sign off, though: I was lucky on that hotspot because the attacker was not trying to launch a MITM attack against my SSH traffic. If they had, the keys would not have matched my previous connection attempts to my SSH server, and I would have been warned in big bold letters that I was being listened in on, and the SSH client would have quit. In this situation, securing your traffic may be more difficult, but not impossible. I may post later on how one might go about this.

Anyway, hope someone else finds this as useful and interesting as I do. As always, feel free to ask if you have any questions.

UPDATE 04/15/2010: I have done a follow-up post to this article describing how you can use proxychains to allow any program that uses TCP sockets to tunnel traffic over SOCKS proxies, not just ones that have built-in proxy support. I also show how to chain multiple proxies together.

Ubuntu 8.10 on the Eee PC 1000

April 10, 2009

Wow, you know you haven’t posted in a while when your intro paragraph to your next post talks about how Christmas went. In case anyone still cares now that it’s almost Easter, it went well. Very well. I still want to take this time to thank Santa for his enormous generosity this past year, as he was kind enough to get me that netbook that had been dancing around in my dreams for a while: the Eee PC 1000.

I’ve spent the past few months playing around with my shiny new Eee PC, and I am duly impressed. Wireless N, 8GB SSD + 32GB built in flash, 7 (yes, count them, 7) hours of battery life, Bluetooth, webcam + mic, the list goes on and on. All of this technological goodness kept within a sleek, 12 inch wide frame that even Steve Jobs might not deem “junk”. Oh, and did I mention that all of this wonderful hardware has native Linux driver support? Can you say “portable hackstation”?

Yes, it was a good Christmas for this Linux user, and judging from the experience I had with the Eee PC 1000, it’s been a good year for Linux users in general. With netbooks being the fastest-growing segment in the computing arena, Linux’s superior memory and power management, combined with its endless configurability and ever-improving usability, is starting to make Microsoft fear the penguin more than usual. This is not without reason: Ubuntu 8.10 has completed my netbook.

Now, before you all cry out in unison that I can get netbooks with Linux preinstalled, I know. In fact, mine came that way. However, the distribution that shipped with my Eee PC made it feel less like a computer and more like a toy, and a very useless one at that. I really hope that Asus wises up, and starts shipping something that isn’t intentionally crippled for some misguided notion of usability. I am thoroughly convinced that an install of Ubuntu would have been easier to use for anyone than that worthless POS that came preinstalled.

However, as great a fit as the Ubuntu/Eee PC union is, it was not without some small hurdles to overcome first. The following is a short documentation of how to take your nifty new Eee PC and install the latest release of Ubuntu, Intrepid Ibex.

As I’m sure you’ve figured out, installing from CD isn’t going to work so well without a CD drive, so we first need to find another way to get Ubuntu onto the netbook. The easiest way to do this is with a flash drive. There are many ways to get Ubuntu on a flash drive, as documented here, but I will only be covering how I did it, using the installation tool built into Ubuntu. If you don’t have a flash drive, well, buy one. Seriously, it’s like 5 bucks.

Once you’ve gotten a hold of a flash drive, make sure you’ve backed up any important files, because we’re going to wipe it and put Ubuntu onto it. You are also going to need to get an ISO of the latest version of Ubuntu, 8.10 (32 bit), from here. While that’s downloading, you might run off and get an ethernet cable if you don’t have one; you’ll need it later.

It should be mentioned at this point that there are lots of ready-made distros out there specifically for the Eee PC, including a number based off of Ubuntu. In addition, a default installation of Ubuntu does not have driver support enabled for all of the Eee PC components. However, these ready-made distributions strip out a lot of kernel features that you may need at some point, so for most users it’s a better idea to just install the standard edition and install a custom kernel. After all, it would be rather annoying if, for all the Eee PC’s portable goodness, you plugged in some device that normally works under a standard Ubuntu 8.10 install only to find out that support for it has been removed. It’s better to at least have a backup of the original kernel, with all of its driver support, and then run a slimmed down version with the Eee PC drivers compiled in for day to day use. Now, I know what you’re thinking to yourself right now: “I have to replace my kernel just to get this working? What is this, Gentoo?” Do not fear, the Ubuntu community has your back, and has made this process a piece of cake.

Now that you have the ISO downloaded, we can move on to the fun part – installing it on a USB drive. If you already have Ubuntu installed on your desktop/laptop, then you’re all set to start. If not, you need to burn the ISO to a CD, and then boot into it before you can start. Once you have Ubuntu up and running, go to System -> Administration -> Create A USB Startup Disk. This will look slightly different on the Live CD, as you don’t have to select an ISO (it uses itself), but the concept is the same:

Now, simply select the ISO file that you downloaded and the USB drive that you want to install to, and click “Make Startup Disk”. Go get yourself something to eat, as this can take a while, depending on the speed of the disk.

You should now have a bootable USB drive with Ubuntu 8.10 installed, congratulations! You’re well on your way to having it up and running. Now, go ahead and plug it into your Eee PC and power it up. You may need to set the USB drive as the default boot device in the BIOS, so it’s best to check. F2 at the bootup screen does the trick. For some reason, my Eee PC reports USB drives as hard drives, so I would check to make sure that USB is first in the Hard Disk boot priority list.

Once you’ve booted up into Ubuntu using the USB drive, simply install Ubuntu as you normally would, by clicking the Install icon on the desktop and following the prompts. Make sure that your 8GB partition is the one that your root partition is installed to; not doing so will result in slow performance and possibly data loss later on.

Restart, and you’re almost done! Hook up your Eee PC to a wired connection (your wireless most likely won’t work), and follow these instructions to install the custom Eee kernel.

That’s it! I hope you all have found this informative, and I know you will all enjoy Ubuntu on your Eee PC as much as I have.

If you want some tips on configuring your Ubuntu install to deal with the small screen, please see the Ubuntu wiki. Its tips really helped me, and I’m sure they will be of use to all of you as well.

Compulsive Slashdot Reading

April 10, 2009

And they said it would never pay off…:P . But in all seriousness, welcome to all who have now blindly stumbled upon my tiny blog in the middle of the vast sea of cyberspace. Because of the copious amounts of schoolwork and research I’ve had on my plate the past few months, I haven’t set nearly enough time aside to update this blog. This saddens me greatly, so I’m going to begin a renewed effort to start posting my musings concerning technology and such again, and hopefully some of you might be able to glean a few pieces of advice and wisdom out of my incessant babbling. Now for something to write about… I guess I’ll have to see what sets my fingers typing next.

Until then, peace.

Google Releases Free Texting Feature

December 21, 2008

I have to say, I heard about this one coming a few weeks ago and I was quite excited. And now it’s here! Google didn’t disappoint either.

Google’s texting service has pretty much everything I had hoped for, delivered of course with their trademark simplicity. Text messages are sent the same way as chats, simply find the person in your contacts list and, if you have their number stored, select “Send SMS”. Then just type your message, press enter, and off it goes.

In my tests, the messages were all delivered promptly, very little delay. This is a very big plus, as many other free services are slower than Han Solo post-carbonite. Replies also arrive promptly, making it a great, cheap way to have text convos with your friends who have more of a life than you do.

Oh yeah, did I mention the replies? Google assigns anyone who uses their service a unique number, meaning that your friends can reply to any free texts you send, and they get sent straight to your inbox. As I’m on my computer almost as much as I’m away from it, it is incredibly convenient for me to be able to text friends/family from my browser. And, for those few times that I’m not, it’s saved like any other email or chat message, waiting for me when I return from what was most likely a bathroom trip.

I’ve messed with a few free texting services in the past, but most of those experiences consisted of me reading their TOS page and then running away quickly. Enter Google. Their strategy to passively gather information on me and serve me targeted ads in my inbox, while still more than a little unnerving, is miles better than having someone constantly spamming my phone with MMS pron.

Altogether, this is a great new feature, and I really hope that Google decides to run with it. For all of you wondering how to enable this handy service, go to Settings -> Labs in Gmail, and simply enable Text Messaging (SMS) in Chat. I hope everyone finds this as useful as I have!

N.B. I am not actually a Google marketing person, as much as I may sound like one in this post. I was excited, give me a break:P

Handbrake 0.9.3 – DVD Ripping on Ubuntu

December 11, 2008

As most of my friends can attest, I am very big on making backups of my DVDs, so much so that I rarely pull them out of their case except to make rips of them. I tend to break/scratch discs like none other, so I make it a point to have backups. I am very hard to please when it comes to ripping programs, as I want both to be able to tweak advanced settings to my liking and to just be able to throw something in and go. Needless to say, I want the rips to be high quality. I generally use x264 video and AC3 audio muxed into an MKV container, and I’ve found this to be a very good combination. I have tried pretty much every tool that I can find out there to do this for me: dvd::rip, OGMRip, and acidrip to name a few, but have still always fallen back to using a collection of custom CLI scripts that I put together to rip and encode them automatically. I’ve even toyed around with the idea of creating my own GUI, to attempt to fill a rather gaping void of decent ripping programs, but unfortunately have not had the time.

Thankfully, this will no longer be necessary. I have just tested the latest Handbrake release for Linux, and I have to say, these guys have outdone themselves. When I last tried Handbrake, it was simply a CLI version on Linux, and a rather bad one at that. My direct mencoder invocations consistently performed better than their command line program’s calls, a reason alone to move on. Beyond that, it was just hard to use, and if I was doing command line, I might as well just use mencoder. Not so anymore with the release of their latest GUI. It’s a GTK frontend, which really does make encoding as simple as point and click. Now Handbrake has long been a favorite on Windows, so this may not come as a surprise to some, but I really was not expecting this kind of release for Linux from them. Kudos.

The following is a short tutorial on how to set up and use the new Handbrake GUI on Ubuntu:

Some people in Ubuntu forums have thankfully set up a PPA repository of Handbrake to make it easier to install. To install Handbrake on Ubuntu do the following:

sudo gedit /etc/apt/sources.list

Now, you need to copy the lines below into it. If you are on Intrepid, change “hardy” to “intrepid”.

deb http://ppa.launchpad.net/handbrake-ubuntu/ubuntu hardy main
deb-src http://ppa.launchpad.net/handbrake-ubuntu/ubuntu hardy main

Once you’re done, save and close. Now, reload your repositories and install handbrake:

sudo apt-get update
sudo apt-get install handbrake-gtk
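If you would rather not edit sources.list by hand, a rough alternative is to generate the two repository lines for your release and drop them into their own file under /etc/apt/sources.list.d/. The helper name and the handbrake.list file name below are made up for illustration, and the sketch assumes the PPA actually publishes packages for your release.

```shell
# Print the deb and deb-src lines for the Handbrake PPA.
# Usage: handbrake_ppa_lines CODENAME   (hypothetical helper name)
handbrake_ppa_lines() {
    for kind in deb deb-src; do
        echo "$kind http://ppa.launchpad.net/handbrake-ubuntu/ubuntu $1 main"
    done
}
```

For example, `handbrake_ppa_lines "$(lsb_release -cs)" | sudo tee /etc/apt/sources.list.d/handbrake.list` writes the lines for whatever release you are running, after which the usual update and install commands apply.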

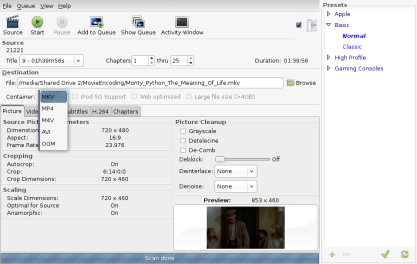

Now Handbrake should be installed. If you’re going to be ripping DVDs, this tutorial assumes that you have libdvdcss installed. You can grab it off of Medibuntu (help.ubuntu.com) if you don’t. Go to the Sound and Video tab in your menu, and select Handbrake. Alternately, you can just type “ghb” into the command line to start up the GUI. If you are having problems that you need to debug, this may be useful as well. Now you should see the following screen:

As you can see, the GUI is laid out pretty simply. A number of pre-made profiles are there for your convenience on the right side, with profiles for everything from iPods to movies to Xbox 360s. To begin your rip, insert a DVD. Click the Source button and just select the DVD drive that you’ve put the disc into.

Check to make sure that the title you want was selected; there should be a preview at the bottom. It seems to just select the longest title available. If it is not the one you want, simply select what title/chapters you wish to rip to your file. If you want to rip something such as a TV show season (meaning you want separate files for each episode), you will need to add each title to the queue individually AFAIK.
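If queueing a whole season by hand gets tedious, one possible workaround is to loop over the title numbers with the command-line version instead. This is only a sketch: it assumes HandBrakeCLI is installed, the function name and the HB override variable are made up for illustration, and encoder and quality options are deliberately left out, so add whichever ones your HandBrakeCLI version supports.

```shell
# Rip several DVD titles into separate files, one HandBrakeCLI run per title.
# Usage: rip_titles DEVICE OUTDIR TITLE [TITLE...]
# HB is a hypothetical override hook; it defaults to the HandBrakeCLI binary.
rip_titles() {
    device=$1
    outdir=$2
    shift 2
    for title in "$@"; do
        # -i: input device or path, -t: title number, -o: output file
        "${HB:-HandBrakeCLI}" -i "$device" -t "$title" -o "$outdir/title_$title.mkv"
    done
}
```

Calling `rip_titles /dev/dvd ~/rips 1 2 3 4` would then encode titles 1 through 4 one after another, assuming /dev/dvd is your drive and ~/rips already exists.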

Choose where you want to save this file. Then, select the file container you want. I personally would recommend the MKV format, as an open source and completely free container, but depending on what you are using your rip for you may not be able to do this. Regardless, there are plenty of options for your container.

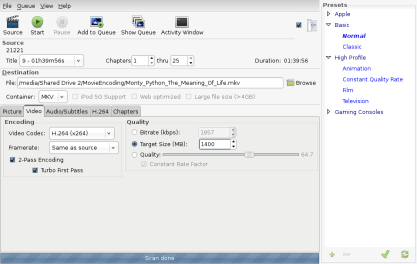

All that’s left now is to change the video/audio encoding settings to your liking. You can essentially configure this as much or as little as you’d like to. If you want subtitles included, make sure that the proper ones are selected in the Audio/Subtitles tab. For me, making rips of DVDs is perfectly managed by the High Profile -> Film profile, with a few small tweaks. One thing I would recommend doing is setting your bitrate/final file size in the video tab. I usually go for a 1.4GB file when using h.264 + AC3 5.1, but again, I go for high quality; you would be perfectly fine going with something lower.
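To get a feel for how a target file size translates into a video bitrate, here is a back-of-the-envelope sketch. It assumes decimal megabytes (1 MB = 8000 kilobits), ignores container overhead, and treats the audio as one constant-bitrate track. The function name and the example numbers (a two-hour film, 448 kbps AC3) are illustrative assumptions, not settings taken from Handbrake.

```shell
# Approximate video bitrate in kbps for a target file size.
# Usage: video_kbps SIZE_MB DURATION_SECONDS AUDIO_KBPS   (hypothetical helper)
video_kbps() {
    # total kilobits / seconds = total kbps (integer division rounds down);
    # whatever the audio does not use is left for the video
    echo $(( $1 * 8000 / $2 - $3 ))
}
```

For a 1400 MB target, a 7200 second runtime, and 448 kbps of audio, `video_kbps 1400 7200 448` prints 1107, so roughly 1100 kbps is left for the video. Longer films or smaller targets push that number down quickly.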

Once you’ve configured everything to your liking, just click Start. If you want to add other movies, click Add To Queue, but do remember that you can only have one DVD in your drive at a time.

I hope this was helpful to someone out there struggling with encoding DVDs on Linux. If there are any errors in the above post, or anything you would like me to expand upon, feel free to let me know; I would be glad to help out anyone who’s having trouble. If you are having problems, this forum discussion (ubuntuforums.org) might be of help as well.

UPDATE: I have now also posted a guide on how to restore these rips to DVDs. Hope it’s useful.

And So It Begins…

December 6, 2008

Well, it’s 4 in the morning, and here I am starting a blog. Really should get to bed soon, but I feel the (not so) strange urge to start writing. So here I am. I’ve wanted to start a blog for a while now, but I’ve never really gotten up the time or energy to do so. Guess there’s no better time than right now.

I’m mainly creating this blog as a place to voice my completely insignificant thoughts on what matters to me. I considered listing them here, but a.) I don’t want to pigeonhole my focus, and b.) it’s hard to break all of those things up into categories, as they overlap pretty often. So for now, we’ll just say this is a technology blog with a smattering of everything else mixed in, and see what comes from there.

Well, they say a journey of a thousand miles begins with a single step. I think I will make mine towards my bed. Maybe next time I will post with something of a little more substance. Adios.